Perception Is All You Need

- David Razdolsky

- Jan 19

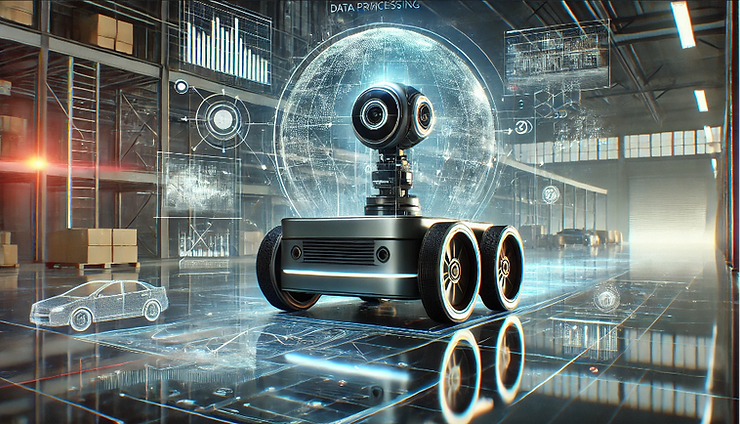

In today’s fast-paced industrial landscape, the ability of mobile machines to navigate and adapt autonomously has become a game-changer. At the heart of this capability lies perception: the combination of sensors, cameras, and advanced algorithms that enables machines to understand and interact with their surroundings in real time. For industries, this translates into higher efficiency, greater safety, and a competitive edge in dynamic environments.

Decisions about perception technology are far more than a technical consideration; they are a strategic business choice. While it’s tempting to focus on immediate costs like sensors, the true investment lies in software development, in infrastructure for deployment and calibration, and in the ongoing support and maintenance that ensure reliability in real-world operations. A robust, reliable perception solution can drastically reduce the long-term costs of field engineers, downtime, and operational inefficiencies.

With tens of millions of dollars and years of development required to bring robotic solutions to market, perception is often the single largest area of investment. Making the wrong choice today, by adopting obsolete or unreliable technology, can have lasting financial and operational consequences. Forward-thinking decision-makers understand that perception isn’t just a cost; it’s the foundation of autonomous success.

Perception: The Foundation of Intelligent Autonomy

Choosing a perception technology is one of the most critical strategic technology decisions, because perception forms the foundation of any intelligent autonomy system. It involves collecting input from sensors such as cameras, laser scanners, inertial measurement units, and ultrasonic devices, then processing this data with algorithms to create a detailed digital representation of the surroundings and of the system’s interaction with them. Only once this perception is established can advanced functions such as understanding, planning, and autonomous action be implemented effectively.

This is why perception technology determines the system’s overall capabilities. A perception system limited to a 2D view constrains the intelligent automation system to the same narrow perspective, while an inaccurate perception system can lead to critical errors, such as collisions with obstacles or even complete operational failure.
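A toy example makes the 2D limitation concrete. The Python sketch below assumes obstacles are available as 3D points in the robot's frame. It models two sensors crudely:

- a 2D scanner that only sees points within a thin slice around its mounting height;
- a 3D sensor that sees anything within the robot's height.

All dimensions, names, and thresholds are hypothetical illustrations. Consider a table whose legs sit outside the robot's path but whose top overhangs it. The 2D model cannot see the tabletop, which is exactly the kind of collision risk described above.

```python
from dataclasses import dataclass
from typing import Callable, Iterable

@dataclass(frozen=True)
class Point3D:
    x: float  # meters ahead of the robot
    y: float  # meters to the robot's left
    z: float  # meters above the floor

# Hypothetical parameters, for illustration only.
SCAN_HEIGHT_M = 0.20     # height of the 2D laser's scan plane
SCAN_BAND_M = 0.02       # half-thickness of the slice the scanner effectively sees
ROBOT_HEIGHT_M = 1.20    # the robot sweeps everything from the floor up to this height
PATH_LENGTH_M = 2.0      # look-ahead distance
PATH_HALF_WIDTH_M = 0.4  # half the robot's width

def seen_by_2d_scanner(p: Point3D) -> bool:
    """A planar scanner only returns hits close to its scan plane."""
    return abs(p.z - SCAN_HEIGHT_M) <= SCAN_BAND_M

def seen_by_3d_sensor(p: Point3D) -> bool:
    """A 3D sensor can check the robot's whole vertical envelope."""
    return 0.0 < p.z <= ROBOT_HEIGHT_M

def in_path(p: Point3D) -> bool:
    """True if the point lies in the rectangle the robot is about to drive through."""
    return 0.0 < p.x <= PATH_LENGTH_M and abs(p.y) <= PATH_HALF_WIDTH_M

def path_blocked(points: Iterable[Point3D], sees: Callable[[Point3D], bool]) -> bool:
    """True if the given sensor model sees any point inside the robot's path."""
    return any(in_path(p) and sees(p) for p in points)

# A table 1.5 m ahead: legs outside the robot's path, and a 0.75 m high
# tabletop overhanging it.
legs = [Point3D(1.5, side, z) for side in (-0.6, 0.6) for z in (0.1, 0.2, 0.5)]
tabletop = [Point3D(1.5, y / 10, 0.75) for y in range(-6, 7)]
table = legs + tabletop

print(path_blocked(table, seen_by_2d_scanner))  # False: the scan plane passes under the tabletop
print(path_blocked(table, seen_by_3d_sensor))   # True: the overhang is detected
```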

Robust, rich, and reliable perception is the cornerstone of advanced autonomy, enabling capabilities such as dynamic navigation, object recognition, and situational awareness. These advancements can allow systems to operate longer without human intervention, across diverse operational environments, directly boosting ROI.

Perception also significantly affects the solution’s commissioning process. Commissioning often involves installing navigation aids such as physical markers, followed by extensive site exploration, calibration, and testing. By adopting perception technologies that minimize costly installations and shorten commissioning, organizations can accelerate deployment considerably. This efficiency lets more deployments be completed with fewer resources in less time, providing a competitive edge.

However, perception is one of the most challenging technologies to perfect. Achieving the performance level required for production-ready operation in real-world environments takes years of development. As a result, many companies continue using obsolete technologies simply because they meet the minimal functional requirements.

Current State of Perception

The perception landscape in autonomous mobile robots (AMRs) is evolving, with a significant shift from first-generation to next-generation technologies. Understanding these differences is key to evaluating the current state of perception systems.

First-Generation Solutions: Traditionally, many AMRs relied on physical markers and 2D laser scanners as their primary sensing technology. These systems often incorporated simple software with separate layers for localization and obstacle detection, primarily aimed at ensuring safety through emergency stops. While reliable for basic tasks in predictable, static environments, this approach has notable limitations:

Limited Scope: Systems using 2D perception are restricted to static, flat, predictable, and controlled environments, significantly limiting the number of customers and automation flows they can serve.

Setup and Maintenance: Systems using 2D laser scanners or physical markers demand a lengthy, often costly initial setup and require ongoing maintenance.

Limited Information: These systems capture limited information on their surroundings and therefore cannot identify objects, detect humans, read signs, or capture ground-level details that can be used for intelligent behavior by the robot.

While these solutions meet minimal functional requirements and have been deployed successfully by very large companies to automate specific flows, their rigid architecture and limited scope leave the automation needs of most companies unmet.

Next-Generation Perception Platforms: Modern, software-defined perception systems are characterized by their use of 3D information, sensor fusion, and advanced algorithms to address the challenges of dynamic and complex environments. These next-generation platforms integrate:

3D Perception: Leveraging cameras, 3D LiDAR and other sensors to create detailed spatial data and capture richer environmental information.

Sensor Fusion: Combining inputs from multiple sensors, with vision as the core data source, to provide robust and reliable perception that meets industrial grade performance levels.

Multi-Layered Integration: Enabling simultaneous localization, obstacle detection, and scene understanding through integrated software architectures in which information from each layer enriches the others.
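Sensor fusion can take many forms. One of the simplest is inverse-variance weighting, where each sensor's estimate of the same quantity is weighted by how much it is trusted.

The Python sketch below applies this to independent distance estimates for one obstacle, for example a stereo camera depth reading and a LiDAR return. The sensor noise figures are hypothetical. Production systems typically rely on richer machinery such as Kalman filters or factor graphs, so this only illustrates the principle.

```python
import math
from typing import Iterable, Tuple

def fuse_estimates(measurements: Iterable[Tuple[float, float]]) -> Tuple[float, float]:
    """Fuse independent (value, std_dev) estimates of one quantity.

    Each estimate is weighted by 1 / variance, so noisier sensors count less.
    Returns the fused value and its standard deviation.
    """
    weight_sum = 0.0
    weighted_value_sum = 0.0
    for value, std_dev in measurements:
        if std_dev <= 0:
            raise ValueError("standard deviation must be positive")
        weight = 1.0 / (std_dev ** 2)
        weight_sum += weight
        weighted_value_sum += weight * value
    if weight_sum == 0.0:
        raise ValueError("need at least one measurement")
    return weighted_value_sum / weight_sum, math.sqrt(1.0 / weight_sum)

# Hypothetical readings of the distance to one obstacle, in meters.
camera_depth = (2.10, 0.10)  # stereo depth: noisier at this range
lidar_range = (2.00, 0.05)   # LiDAR: tighter noise

distance, sigma = fuse_estimates([camera_depth, lidar_range])
print(f"fused distance: {distance:.3f} m ± {sigma:.3f} m")  # fused distance: 2.020 m ± 0.045 m
```

The fused estimate lands closer to the more precise sensor. Its uncertainty is also lower than that of either input, which is the basic payoff of fusion.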

These advancements deliver key benefits:

Robustness Across Environments: Next-generation platforms operate reliably in varied and dynamic settings, from warehouses to outdoor spaces.

Deployment Efficiency: Reducing setup complexity and commissioning time accelerates deployment, improving scalability and cost efficiency.

AI-Driven Insights: By providing a deeper understanding of the environment, these systems enhance decision-making and enable more advanced autonomy capabilities.

Taking Perception to the Next Level with 3D Visual Perception

Location-aware visual perception has the potential to revolutionize how machines perceive the world. With advanced algorithms, rich data from low-cost cameras can be harnessed for tasks such as localization, mapping, obstacle detection, object recognition, tracking, and other sophisticated perception applications.

For example, a location-aware visual perception system can learn the visual features of a dynamic environment. It can distinguish between static features, which can be reliably used for positioning, and dynamic features, which should not be used as visual anchors. This adaptability makes such systems easy to deploy, eliminating the need for extensive infrastructure or lengthy shutdowns for complex mapping processes. Combined with smart object recognition, this capability also enables intelligent automation solutions to thrive in dynamic environments, such as assembly floors, where traditional systems struggle to operate.
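One simple way to illustrate the static-versus-dynamic distinction is to look at repeated sightings. Record where each tracked feature appears in map coordinates across several passes through the environment. Then treat only the features whose position stays put as anchors for positioning.

The Python sketch below does this with a spread threshold. The feature IDs, threshold, and minimum sighting count are hypothetical. This is a stand-in heuristic, not a description of how the Perception Engine or any particular vSLAM system makes the distinction.

```python
import math
from statistics import mean
from typing import Dict, List, Tuple

Point = Tuple[float, float]

def classify_features(
    observations: Dict[str, List[Point]],
    max_spread_m: float = 0.05,  # hypothetical: how far a "static" feature may wander
    min_sightings: int = 3,      # hypothetical: require repeated evidence
) -> Dict[str, str]:
    """Label each feature 'static', 'dynamic', or 'unknown'.

    observations maps a feature ID to the positions (map coordinates, meters)
    at which it was observed on different passes through the environment.
    """
    labels = {}
    for feature_id, positions in observations.items():
        if len(positions) < min_sightings:
            labels[feature_id] = "unknown"  # not enough evidence either way
            continue
        cx = mean(p[0] for p in positions)
        cy = mean(p[1] for p in positions)
        # Largest distance of any sighting from the feature's mean position.
        spread = max(math.hypot(x - cx, y - cy) for x, y in positions)
        labels[feature_id] = "static" if spread <= max_spread_m else "dynamic"
    return labels

observations = {
    "pillar_corner": [(4.00, 1.00), (4.01, 1.00), (3.99, 1.01)],
    "pallet_edge": [(7.0, 2.0), (9.5, 2.2), (6.1, 3.0)],  # moved between passes
    "door_sign": [(2.0, 6.0)],                            # seen only once
}
labels = classify_features(observations)
anchors = [fid for fid, label in labels.items() if label == "static"]
print(labels)
print(anchors)  # ['pillar_corner']
```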

Enabled by these and other advanced capabilities, visual perception unlocks new possibilities for more affordable and capable intelligent automation. It provides an elegant and robust solution, setting the foundation for the next generation of intelligent autonomous systems. After all, evolution chose vision as a primary sensing mechanism; shouldn’t we do the same for robots?

However, developing these platforms is inherently challenging. The integration of 3D data and sensor fusion requires sophisticated algorithms, processing power, and extensive development effort to ensure real-world reliability. As a result, many organizations face difficulties in transitioning from first-generation to next-generation perception systems.

The Build-vs-Buy Dilemma

Implementing visual perception is not without challenges. At its core, it usually relies on Visual Simultaneous Localization and Mapping (vSLAM) techniques. A recent Survey of Visual SLAM Methods reveals that, despite extensive academic research, most vSLAM technologies continue to struggle with dynamic environments, robustness issues, computational constraints, and scalability challenges.
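vSLAM builds a map while simultaneously localizing against it. The localization half contains a purely geometric step that can be shown compactly. Given landmarks whose positions are known in the map, and the same landmarks as observed from the robot, the task is to find the pose that best explains the observations.

The Python sketch below solves a simplified 2D version with a closed-form least-squares rigid alignment, the 2D case of the Kabsch/Umeyama method. It assumes that feature matching has already been done and that no match is an outlier. Real vSLAM systems work in 3D, with camera projection models, robust outlier rejection, and continuous map updates, and much of the difficulty described above lies there.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]

def estimate_pose_2d(observed: List[Point], mapped: List[Point]) -> Tuple[float, float, float]:
    """Least-squares 2D rigid alignment of matched landmark pairs.

    Finds (x, y, theta) such that mapped[i] ≈ R(theta) * observed[i] + (x, y),
    i.e. the robot's pose in the map frame.
    """
    if len(observed) != len(mapped) or len(observed) < 2:
        raise ValueError("need at least two matched landmark pairs")
    n = len(observed)
    ox = sum(p[0] for p in observed) / n
    oy = sum(p[1] for p in observed) / n
    mx = sum(p[0] for p in mapped) / n
    my = sum(p[1] for p in mapped) / n
    # Accumulate dot and cross products of the centered point sets;
    # the optimal rotation angle is atan2(cross_sum, dot_sum).
    dot_sum = 0.0
    cross_sum = 0.0
    for (px, py), (qx, qy) in zip(observed, mapped):
        ax, ay = px - ox, py - oy
        bx, by = qx - mx, qy - my
        dot_sum += ax * bx + ay * by
        cross_sum += ax * by - ay * bx
    theta = math.atan2(cross_sum, dot_sum)
    c, s = math.cos(theta), math.sin(theta)
    # The translation moves the rotated observed centroid onto the map centroid.
    x = mx - (c * ox - s * oy)
    y = my - (s * ox + c * oy)
    return x, y, theta

# Usage: simulate a robot at (1.0, 2.0) facing 30 degrees, then recover its pose.
true_x, true_y, true_theta = 1.0, 2.0, math.radians(30)
landmarks = [(5.0, 5.0), (3.0, 8.0), (-2.0, 4.0)]
ct, st = math.cos(true_theta), math.sin(true_theta)
observed = [(ct * (lx - true_x) + st * (ly - true_y),
             -st * (lx - true_x) + ct * (ly - true_y)) for lx, ly in landmarks]
x, y, theta = estimate_pose_2d(observed, landmarks)
print(round(x, 3), round(y, 3), round(math.degrees(theta), 3))  # 1.0 2.0 30.0
```

If one of the "landmarks" were a moved pallet, its stale map position would pull the estimate away from the true pose. That is why anchors should come only from static features.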

When companies attempt to implement open-source academic vSLAM algorithms, they often find that these solutions fail to perform reliably in real-world scenarios. At this point, some may abandon visual perception altogether, while others may opt to develop an in-house solution. Although this approach could be effective for some organizations, it shouldn’t be taken lightly.

Developing a visual perception system extends far beyond algorithms: it demands a comprehensive toolchain for calibrating, monitoring, testing, updating, and supporting real-world robot fleets. This endeavor requires a multidisciplinary team with expertise in geometric computer vision, sensor fusion, machine learning, and software development.

Organizations must also account for extended time-to-market timelines, ongoing system maintenance, and the potential risks of technological setbacks or knowledge loss due to employee turnover. Without a thorough evaluation of the development effort involved, pursuing an in-house visual perception solution can result in unforeseen challenges, escalating costs, and significant delays.

Tesla exemplifies a company that has successfully adopted a vision-led perception strategy, relying solely on vision to advance its self-driving car technology. However, most organizations lack the extensive funding and access to specialized talent that Tesla enjoys. For the majority of companies, building an in-house visual perception system is often impractical due to the substantial resources, expertise, and time it demands.

This is why we at RGo Robotics have developed the Perception Engine – an innovative learning vision system that makes visual perception easy.

The Perception Engine is a modular software stack that provides real-time data for localization, obstacle detection, and scene understanding through an API. This allows companies to focus on developing their unique intelligent autonomy solutions while leveraging an advanced, adaptable, and affordable visual perception system that they can deploy quickly and rely on.

How Companies are Leveraging the Perception Engine:

Wheel.me is using the Perception Engine in Genius 2, a wheel-sized AMR that can operate in diverse, dynamic environments.

Capra Robotics is using the Perception Engine in Hircus, an AMR platform that can transition smoothly between indoor and outdoor environments.

Onward Robotics is using the Perception Engine in Lumabot, an AMR that boosts picking productivity by working alongside people in dynamic aisles.

Key Questions to Ask to Get Perception Right:

First, you want to understand the potential value of advanced perception for your specific system:

Strategic Alignment – Does the perception stack align with the company’s long-term goals and planned AI/autonomy advancements?

Deployment Effort – What is the potential benefit of accelerating commissioning and reducing installation costs?

Uptime & Maintenance – How valuable is increasing system uptime and minimizing maintenance efforts?

Use-case Flexibility – What is the added value of having broader flexibility to accommodate multiple use cases?

Unit Cost – How significant is the potential to reduce the bill of materials (BOM) and overall unit costs?

If you find advanced perception valuable, the following considerations will help you decide between building or buying your perception solution:

Development Feasibility – Does the team have the necessary expertise and resources to develop and maintain an in-house perception solution?

Time to Market – What is the desired time-to-market for the intelligent automation solution, and what are the potential risks of delays?

Cost-Effectiveness – How does the total cost of developing and maintaining a perception system in-house compare to purchasing an off-the-shelf solution?
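The cost-effectiveness question often comes down to simple arithmetic over a planning horizon. The Python sketch below compares two options:

- a build option: upfront engineering plus a retained maintenance team;
- a buy option: one-time integration plus per-unit licensing.

The per-unit licensing model and every input value are hypothetical placeholders, not market figures; replace them with your own estimates. The model deliberately ignores time-to-market delay, development risk, and discounting, which the questions above also raise.

```python
from dataclasses import dataclass

@dataclass
class BuildOption:
    engineers: int                 # team size during development
    cost_per_engineer_year: float  # fully loaded annual cost per engineer
    development_years: float       # time until production-ready
    maintenance_engineers: int     # team retained for support after launch

@dataclass
class BuyOption:
    integration_cost: float        # one-time integration effort
    license_per_unit: float        # per-robot fee (hypothetical pricing model)

def build_cost(opt: BuildOption, horizon_years: float) -> float:
    """Development cost plus maintenance for the rest of the horizon."""
    development = opt.engineers * opt.cost_per_engineer_year * opt.development_years
    maintenance_years = max(0.0, horizon_years - opt.development_years)
    maintenance = opt.maintenance_engineers * opt.cost_per_engineer_year * maintenance_years
    return development + maintenance

def buy_cost(opt: BuyOption, units: int) -> float:
    """Integration cost plus licensing for every unit shipped."""
    return opt.integration_cost + opt.license_per_unit * units

def break_even_units(build: BuildOption, buy: BuyOption, horizon_years: float) -> float:
    """Fleet size at which licensing would cost as much as building in-house."""
    return (build_cost(build, horizon_years) - buy.integration_cost) / buy.license_per_unit

# Placeholder inputs for illustration only.
build = BuildOption(engineers=10, cost_per_engineer_year=200_000,
                    development_years=3, maintenance_engineers=4)
buy = BuyOption(integration_cost=300_000, license_per_unit=1_000)
horizon_years, units = 5, 2_000

print(f"build: ${build_cost(build, horizon_years):,.0f}")  # build: $7,600,000
print(f"buy:   ${buy_cost(buy, units):,.0f}")              # buy:   $2,300,000
print(f"break-even fleet size: {break_even_units(build, buy, horizon_years):,.0f} units")  # 7,300 units
```

Under a per-unit pricing assumption, the break-even fleet size is a useful single number to compare against a realistic deployment forecast.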

Conclusion

Perception is the cornerstone of intelligent autonomy, transforming raw sensor data into actionable insights that power advanced automation. As the demand for adaptable, efficient, and robust systems continues to grow, organizations must carefully evaluate their perception strategy to stay competitive. Whether through building in-house capabilities or leveraging cutting-edge solutions like the Perception Engine, the right perception system can unlock new possibilities, reduce operational costs, and pave the way for the next generation of intelligent automation. The choice you make today will shape your ability to thrive in the dynamic landscapes of tomorrow.

Want to learn more about RGo’s Perception Engine? Let’s Talk!
